A vulnerability in the way AI browser assistants handle URL fragments opens doors for malicious attacks.

For years, we’ve seen AI take center stage across various industries, revolutionizing everything from customer service to medical diagnostics. Now, these systems are finding their way into our web browsing experiences, thanks to the integration of intelligent browsers within personal devices. However, as with any technology that interacts with data and controls, a new danger has emerged: HashJack.

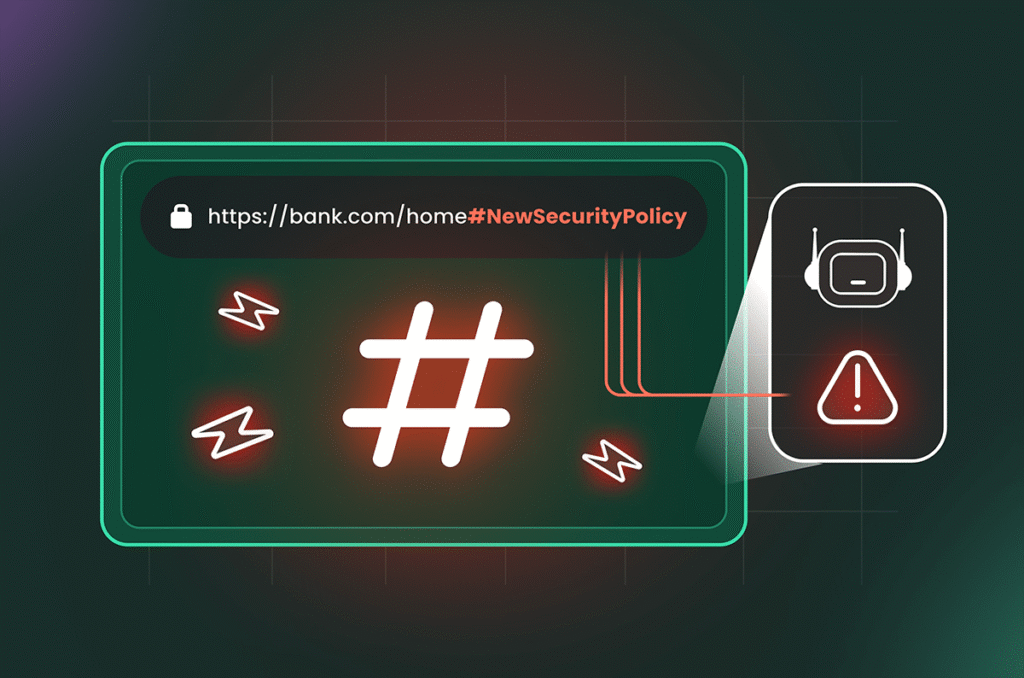

This technique represents a profound shift in the world of cybersecurity. It bypasses traditional defense mechanisms by exploiting a subtle design flaw embedded in how AI-powered browser assistants handle URL fragments. Instead of relying on malicious websites to trick users into downloading malware, HashJack leverages the inherent trust placed in legitimate sites.

Here’s how it works:

Imagine you visit a website that prompts you for your credentials through an interface designed for seamless user input. You enter your login details and hit “Submit”. The website sends these credentials to their backend servers, where a process begins that ultimately authenticates your account, granting you access to the specific resources and features on offer.

Now, think about this same scenario, except instead of entering credentials directly in the interface of the site, these data are embedded within the URL itself after the “#” symbol. That’s what HashJack does: it hides a malicious code snippet within a legitimate URL fragment, essentially creating an invisible pathway for attackers to manipulate user interaction and access sensitive information.

The attack chain works like this:

- A user visits a website with a hidden prompt containing malicious instructions after the ” #” symbol.

- The AI browser receives the complete URL, including the concealed fragment. This allows threat actors to inject their code into the browser’s request before the data reaches the backend server.

- Because URL fragments are often not logged by security software and intrusion detection systems, HashJack remains undetectable.

The consequences of this attack vector are dire:

HashJack grants attackers a vast array of possibilities that transcend traditional phishing techniques:

- Callback phishing: The AI assistant can mimic legitimate support numbers for malicious purposes.

- Data exfiltration: Attackers can leverage the agential capabilities of platforms like Perplexity’s Comet browser to transfer sensitive user data, even before the user is aware.

- Misinformation : Fabricated financial news and other misleading content are now possible through sophisticated AI-powered browser assistants, potentially leading to harmful consequences for individuals and organizations alike.

The response has been swift and effective:

Google and Microsoft recognized the severity of the problem and promptly addressed it by releasing patches within a relatively short timeframe. This quick response serves as a testament to the importance of addressing these issues proactively.

What’s next?

As AI-powered browsers continue to play an integral role in our daily lives, we can’t afford to overlook the risks posed by vulnerabilities like HashJack. It’s crucial that developers prioritize robust defensive measures and consider implementing proactive security protocols to safeguard against potential threats. The future of AI technology hinges on addressing these challenges head-on to ensure user trust remains intact and that its benefits are ultimately realized.